Configuring IP Whitelisting for OpenStack Load Balancer using Terraform on WEkEO Elasticity

This guide explains how to configure IP whitelisting (allowed_cidrs) on an existing OpenStack Load Balancer using Terraform. The configuration limits access to your cluster through the load balancer to the IP ranges you list.

What We Are Going To Cover

Gather the necessary load balancer and cluster data (see Prerequisites)

Create the Terraform Configuration

Import Existing Load Balancer Listener

Run Terraform

Test and verify that whitelisting protects the load balancer

Prerequisites

No. 1 Account

You need a WEkEO Elasticity hosting account with access to the Horizon interface: https://horizon.cloudferro.com.

No. 2 Basic parameters already defined for whitelisting

See article Configuring IP Whitelisting for OpenStack Load Balancer using Horizon and CLI on WEkEO Elasticity for definitions of the basic notions and parameters used here.

No. 3 Terraform installed

You need Terraform version 1.5 or higher, because the declarative import block used below was introduced in Terraform 1.5.

For a complete introduction to installing Terraform and using it with OpenStack, see article Generating and authorizing Terraform using Keycloak user on WEkEO Elasticity.
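To confirm that the installed Terraform meets the 1.5 minimum, here is a minimal sketch. It assumes bash and a `sort` that supports `-V` (GNU coreutils); the function names `version_ge` and `parse_terraform_version` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 >= version $2.
# Relies on version-aware ordering from GNU sort (-V).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: read `terraform version` output on stdin and print
# the bare version number from its first line, e.g. "Terraform v1.5.7" -> "1.5.7".
parse_terraform_version() {
  head -n 1 | sed -E 's/^Terraform v([0-9.]+).*/\1/'
}

# Usage (requires terraform on PATH):
#   v=$(terraform version | parse_terraform_version)
#   version_ge "$v" 1.5.0 || echo "Terraform $v is older than 1.5.0" >&2
```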

No. 4 Unrestricted application credentials

You need OpenStack application credentials created as unrestricted (the Unrestricted checkbox in Horizon, or the --unrestricted flag in the CLI). See article How to generate or use Application Credentials via CLI on WEkEO Elasticity.

The first part of that article describes how to install the OpenStack client and connect it to the cloud. Once that is in place, the quickest way to create an unrestricted application credential is a command like this:

openstack application credential create cred_unrestricted --unrestricted

This creates an unrestricted credential called cred_unrestricted.

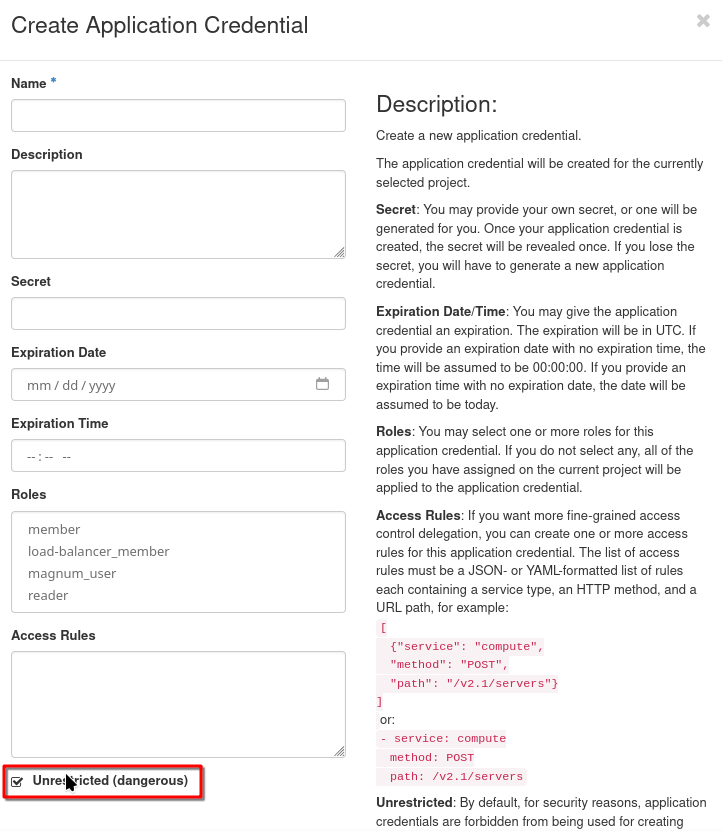

Alternatively, in Horizon, go to Identity –> Application Credentials –> Create Application Credential and select the Unrestricted checkbox.

Log in to your account using this unrestricted credential.
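The openstack_auth.sh file used later authenticates with a username and password. If you would rather have the OpenStack client and Terraform authenticate with the application credential itself, a sketch like the one below could generate an equivalent file. It assumes the standard application-credential variables (OS_AUTH_TYPE, OS_APPLICATION_CREDENTIAL_ID, OS_APPLICATION_CREDENTIAL_SECRET), which the OpenStack client reads and the Terraform OpenStack provider also picks up from the environment; `appcred_env` and the file name appcred_auth.sh are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print shell exports for application-credential auth.
# Arguments: auth URL, region, credential ID, credential secret.
appcred_env() {
  local auth_url=$1 region=$2 cred_id=$3 cred_secret=$4
  printf 'export OS_AUTH_TYPE="v3applicationcredential"\n'
  printf 'export OS_AUTH_URL="%s"\n' "$auth_url"
  printf 'export OS_REGION_NAME="%s"\n' "$region"
  printf 'export OS_APPLICATION_CREDENTIAL_ID="%s"\n' "$cred_id"
  printf 'export OS_APPLICATION_CREDENTIAL_SECRET="%s"\n' "$cred_secret"
}

# Usage sketch (needs a configured OpenStack client). Assumes "-f value"
# prints the id on the first line and the secret on the second.
#   { read -r id; read -r secret; } < <(openstack application credential create \
#       cred_unrestricted --unrestricted -f value -c id -c secret)
#   appcred_env "https://your-openstack-url:5000/v3" "your-region" "$id" "$secret" > appcred_auth.sh
#   chmod 600 appcred_auth.sh
```

The secret is shown only when the credential is created, which is why the sketch captures it in the same step.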

Prepare Your Environment

Work through the article from Prerequisite No. 2; it shows how to obtain all the input parameters used here (load balancer ID, listener ID, allowed IP ranges) with Horizon and CLI commands.

Also, authenticate using the application credential from Prerequisite No. 4.
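The listener ID is needed later for the import. One way to find it is to list the listeners of the load balancer and pick the one on the Kubernetes API port, 6443. A sketch, assuming the Octavia CLI plugin (python-octaviaclient) is installed; `pick_listener_for_port` is a hypothetical helper that filters `ID PORT` pairs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "ID PORT" lines on stdin and print the ID
# of the first listener whose port equals $1.
pick_listener_for_port() {
  awk -v port="$1" '$2 == port { print $1; exit }'
}

# Usage sketch (requires the OpenStack client with the Octavia plugin):
#   LB_ID="your-lb-id"
#   openstack loadbalancer listener list --loadbalancer "$LB_ID" \
#       -f value -c id -c protocol_port | pick_listener_for_port 6443
```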

Configure Terraform for whitelisting

Instead of performing the whitelisting procedure manually, we can use Terraform and keep the configuration in a remote Git repository.
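When the configuration lives in a Git repository, local state, saved plans and credentials should stay out of it. A minimal sketch that writes a .gitignore; the helper `write_gitignore` is hypothetical, openstack_auth.sh is the credentials file created below, and the `*.tfplan` pattern assumes you give saved plan files that extension.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a .gitignore into the Terraform directory $1.
write_gitignore() {
  cat > "$1/.gitignore" <<'EOF'
# Provider plugins downloaded by "terraform init"
.terraform/
# Local state can contain project details; keep it out of Git
*.tfstate
*.tfstate.*
# Saved plan files
*.tfplan
# Credentials
openstack_auth.sh
EOF
}
# .terraform.lock.hcl is deliberately not ignored: HashiCorp recommends
# committing it so that everyone uses the same provider versions.
```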

Create file openstack_auth.sh with your OpenStack credentials:

export OS_AUTH_URL="https://your-openstack-url:5000/v3"
export OS_PROJECT_NAME="your-project"
export OS_USERNAME="your-username"
export OS_PASSWORD="your-password"
export OS_REGION_NAME="your-region"
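The OpenStack provider reads these variables from the environment, so the file must be sourced in the shell that runs Terraform (`source openstack_auth.sh`). A small check can catch a variable that was left unset; `require_vars` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report every variable named in "$@" that is unset or
# empty, and return non-zero if any is missing.
require_vars() {
  local missing=0 name
  for name in "$@"; do
    if [ -z "${!name:-}" ]; then   # indirect expansion: value of the variable called $name
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   source openstack_auth.sh
#   require_vars OS_AUTH_URL OS_PROJECT_NAME OS_USERNAME OS_PASSWORD OS_REGION_NAME
```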

Create a new directory for your Terraform configuration and create the following files:

Note

This example was created for a brand-new Magnum cluster. You may need to adjust it to suit your setup.

Create these Terraform files in that directory:

main.tf

terraform {
  required_version = ">= 1.5.0" # import blocks need Terraform 1.5+
  required_providers {
    openstack = {
      source  = "terraform-provider-openstack/openstack"
      version = "1.47.0"
    }
  }
}

provider "openstack" {
  use_octavia = true # Required for Load Balancer v2 API
}

variables.tf

variable "ID_OF_LOADBALANCER" {
  type        = string
  description = "ID of the existing OpenStack Load Balancer"
}

variable "allowed_cidrs" {
  type        = list(string)
  description = "List of IP ranges in CIDR format to whitelist"
}
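A malformed entry in allowed_cidrs is easier to catch before Terraform calls the API. A minimal local pre-check for IPv4 CIDR syntax, assuming IPv4-only ranges; `is_ipv4_cidr` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if $1 is an IPv4 CIDR such as 192.168.1.0/24.
# Checks syntax and ranges only (octets 0-255, prefix 0-32); IPv6 is not handled.
is_ipv4_cidr() {
  local re='^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})/([0-9]{1,2})$'
  [[ $1 =~ $re ]] || return 1
  local i
  for i in 1 2 3 4; do
    # 10# forces base 10 so that octets like "08" are not read as octal
    (( 10#${BASH_REMATCH[i]} <= 255 )) || return 1
  done
  (( 10#${BASH_REMATCH[5]} <= 32 ))
}

# Usage:
#   for c in 10.0.0.1/32 192.168.1.0/24 172.16.0.0/16; do
#     is_ipv4_cidr "$c" || echo "invalid CIDR: $c" >&2
#   done
```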

terraform.tfvars

ID_OF_LOADBALANCER = "your-lb-id"

allowed_cidrs = [
  "10.0.0.1/32",    # Single IP address
  "192.168.1.0/24", # IP range
  "172.16.0.0/16"   # Larger subnet
]
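If the whitelist changes often, terraform.tfvars can be generated from a plain list of ranges instead of being edited by hand. A sketch; `write_tfvars` is a hypothetical helper, and its output uses the same HCL syntax as the file above (HCL accepts the trailing comma after the last list item).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a terraform.tfvars body.
# $1 = load balancer ID, remaining arguments = CIDR ranges.
write_tfvars() {
  local lb_id=$1 cidr
  shift
  printf 'ID_OF_LOADBALANCER = "%s"\n' "$lb_id"
  printf 'allowed_cidrs = [\n'
  for cidr in "$@"; do
    printf '  "%s",\n' "$cidr"
  done
  printf ']\n'
}

# Usage:
#   write_tfvars "your-lb-id" 10.0.0.1/32 192.168.1.0/24 172.16.0.0/16 > terraform.tfvars
```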

lb.tf

resource "openstack_lb_listener_v2" "k8s_api_listener" {
  loadbalancer_id = var.ID_OF_LOADBALANCER
  allowed_cidrs   = var.allowed_cidrs
  protocol_port   = 6443 # Kubernetes API port
  protocol        = "TCP"
}
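Once allowed_cidrs is set on the listener, Octavia accepts connections only from source addresses inside one of the listed ranges and blocks all others. To predict which client addresses will be admitted, here is a pure-bash sketch (IPv4 only; `ip_to_int`, `ip_in_cidr` and `ip_allowed` are hypothetical helpers).

```shell
#!/usr/bin/env bash
# Hypothetical helpers, IPv4 only.
# ip_to_int: convert a dotted-quad address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# ip_in_cidr: succeed if address $1 lies inside CIDR $2 (format a.b.c.d/n).
ip_in_cidr() {
  local net=${2%/*} prefix=${2#*/}
  # Build the netmask, e.g. /24 -> 0xFFFFFF00; /0 matches everything.
  local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}

# ip_allowed: succeed if $1 matches any of the CIDRs that follow it.
ip_allowed() {
  local ip=$1 cidr
  shift
  for cidr in "$@"; do
    ip_in_cidr "$ip" "$cidr" && return 0
  done
  return 1
}

# Usage:
#   ip_allowed 172.16.200.3 10.0.0.1/32 192.168.1.0/24 172.16.0.0/16 && echo admitted
```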

Import Existing Load Balancer Listener

Since version 1.5, Terraform can import an existing resource declaratively, using an import block:

import.tf

import {
  to = openstack_lb_listener_v2.k8s_api_listener
  id = "your-listener-id"
}

Alternatively, you can import the listener imperatively from the command line:

terraform import openstack_lb_listener_v2.k8s_api_listener "<your-listener-id>"
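The import ID is the UUID of the listener, not of the load balancer. A sketch that checks the UUID format before writing import.tf; `write_import_tf` is a hypothetical helper, and it cannot tell a listener UUID from any other UUID.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write import.tf for listener UUID $1 into directory $2.
# Only the UUID format is checked; the ID must still belong to a listener.
write_import_tf() {
  local id=$1 dir=${2:-.}
  local re='^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$'
  if ! [[ $id =~ $re ]]; then
    echo "not a UUID: $id" >&2
    return 1
  fi
  cat > "$dir/import.tf" <<EOF
import {
  to = openstack_lb_listener_v2.k8s_api_listener
  id = "$id"
}
EOF
}

# Usage:
#   write_import_tf "<your-listener-id>" .
```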

Run Terraform

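Execute the following commands. The plan is saved to a separate plan file, not to a .tf file, because Terraform reads every .tf file in the directory as configuration and cannot parse a binary plan:

terraform init
terraform plan -out=listener.tfplan
terraform apply listener.tfplan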
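Before applying, it is worth confirming that the plan only imports and updates the listener and destroys nothing. A sketch that parses the summary line Terraform prints at the end of a plan (as in the example output below); `plan_destroys_nothing` is a hypothetical helper, and the plan file name is only an example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read plan output on stdin and succeed only if the
# "Plan: ..." summary line reports 0 resources to destroy.
# Returns non-zero when no summary line is found (e.g. "No changes.").
plan_destroys_nothing() {
  local summary re='([0-9]+) to destroy'
  summary=$(grep -E '^Plan: ' | tail -n 1)
  [ -n "$summary" ] || return 1
  [[ $summary =~ $re ]] || return 1
  [ "${BASH_REMATCH[1]}" -eq 0 ]
}

# Usage sketch (-no-color keeps ANSI codes out of the "Plan:" line):
#   terraform plan -no-color -out=listener.tfplan | tee plan.log
#   plan_destroys_nothing < plan.log && terraform apply listener.tfplan
```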

Example output of the plan, showing the listener being imported and updated in place:

openstack_lb_listener_v2.k8s_api_listener: Preparing import... [id=bbf39f1c-6936-4344-9957-7517d4a979b6]
openstack_lb_listener_v2.k8s_api_listener: Refreshing state... [id=bbf39f1c-6936-4344-9957-7517d4a979b6]

Terraform used the selected providers to generate the following execution
plan. Resource actions are indicated with the following symbols:
  ~ update in-place

Terraform will perform the following actions:

  # openstack_lb_listener_v2.k8s_api_listener will be updated in-place
  # (imported from "bbf39f1c-6936-4344-9957-7517d4a979b6")
  ~ resource "openstack_lb_listener_v2" "k8s_api_listener" {
        admin_state_up = true
      ~ allowed_cidrs = [
          + "10.0.0.1/32",
        ]
        connection_limit = -1
        default_pool_id = "5991eacc-5869-4205-a646-d27646ccb216"
        default_tls_container_ref = null
        description = null
        id = "bbf39f1c-6936-4344-9957-7517d4a979b6"
        insert_headers = {}
        loadbalancer_id = "2d6b335f-fb05-4496-8593-887f7e2c49cf"
        name = "lb-testing-ih347dstxyl2-api_lb_fixed-w2im3obvdv2p-listener-t36tocd4onxk"
        protocol = "TCP"
        protocol_port = 6443
        region = "<concealed by 1Password>"
        sni_container_refs = []
        tenant_id = "<concealed by 1Password>"
        timeout_client_data = 50000
        timeout_member_connect = 5000
        timeout_member_data = 50000
        timeout_tcp_inspect = 0
      - timeouts {}
    }

Plan: 1 to import, 0 to add, 1 to change, 0 to destroy.

Tests

By default, the Magnum load balancer has no access restrictions, so the Kubernetes API responds to requests from any IP address.

Before the changes:

curl -k "https://<KUBE_API_IP>:6443/livez?verbose"

[+]ping ok
[+]log ok
[+]etcd ok
[+]poststarthook/start-kube-apiserver-admission-initializer ok
[+]poststarthook/generic-apiserver-start-informers ok
[+]poststarthook/priority-and-fairness-config-consumer ok
[+]poststarthook/priority-and-fairness-filter ok
[+]poststarthook/storage-object-count-tracker-hook ok
[+]poststarthook/start-apiextensions-informers ok
[+]poststarthook/start-apiextensions-controllers ok
[+]poststarthook/crd-informer-synced ok
[+]poststarthook/start-system-namespaces-controller ok
[+]poststarthook/bootstrap-controller ok
[+]poststarthook/rbac/bootstrap-roles ok
[+]poststarthook/scheduling/bootstrap-system-priority-classes ok
[+]poststarthook/priority-and-fairness-config-producer ok
[+]poststarthook/start-cluster-authentication-info-controller ok
[+]poststarthook/start-kube-apiserver-identity-lease-controller ok
[+]poststarthook/start-deprecated-kube-apiserver-identity-lease-garbage-collector ok
[+]poststarthook/start-kube-apiserver-identity-lease-garbage-collector ok
[+]poststarthook/start-legacy-token-tracking-controller ok
[+]poststarthook/aggregator-reload-proxy-client-cert ok
[+]poststarthook/start-kube-aggregator-informers ok
[+]poststarthook/apiservice-registration-controller ok
[+]poststarthook/apiservice-status-available-controller ok
[+]poststarthook/kube-apiserver-autoregistration ok
[+]autoregister-completion ok
[+]poststarthook/apiservice-openapi-controller ok
[+]poststarthook/apiservice-openapiv3-controller ok
[+]poststarthook/apiservice-discovery-controller ok
livez check passed
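With `?verbose`, the API server prints one line per check, prefixed with `[+]` when the check passes and `[-]` when it fails. A hypothetical helper, `livez_ok`, that turns such output into a pass/fail status for scripting:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read /livez?verbose output on stdin and succeed only if
# no check is marked "[-]" and the "livez check passed" line is present.
livez_ok() {
  local body
  body=$(cat)
  ! grep -q '^\[-\]' <<< "$body" && grep -q 'livez check passed' <<< "$body"
}

# Usage (-s hides the progress meter, -m 5 caps the wait at 5 seconds):
#   curl -ks -m 5 "https://<KUBE_API_IP>:6443/livez?verbose" | livez_ok && echo "API reachable and live"
```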

After the changes, the same request sent from an IP address outside the allowed ranges times out:

curl -k "https://<KUBE_API_IP>:6443/livez?verbose" -m 5

curl: (28) Connection timed out after 5000 milliseconds
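To script the before/after comparison, curl's exit status distinguishes the cases: 0 means the request completed, 28 that the time limit set with -m expired (the blocked case shown above), and 7 that curl could not connect, for example because the connection was refused. A sketch; `classify_curl_exit` and `probe_api` are hypothetical, and a timeout can also have causes other than the whitelist.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a curl exit status to a short verdict.
classify_curl_exit() {
  case $1 in
    0)  echo "reachable" ;;
    6)  echo "dns-failure" ;;  # CURLE_COULDNT_RESOLVE_HOST
    7)  echo "refused" ;;      # CURLE_COULDNT_CONNECT
    28) echo "timeout" ;;      # CURLE_OPERATION_TIMEDOUT, as in the blocked example
    *)  echo "other($1)" ;;
  esac
}

# Hypothetical probe: send the same request as in the test and classify it.
probe_api() {
  curl -ks -o /dev/null -m 5 "https://$1:6443/livez"
  classify_curl_exit "$?"
}

# Usage: run from an allowed and from a non-allowed address and compare:
#   probe_api <KUBE_API_IP>
```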

What To Do Next

Compare with Implementing IP Whitelisting for Load Balancers with Security Groups on WEkEO Elasticity